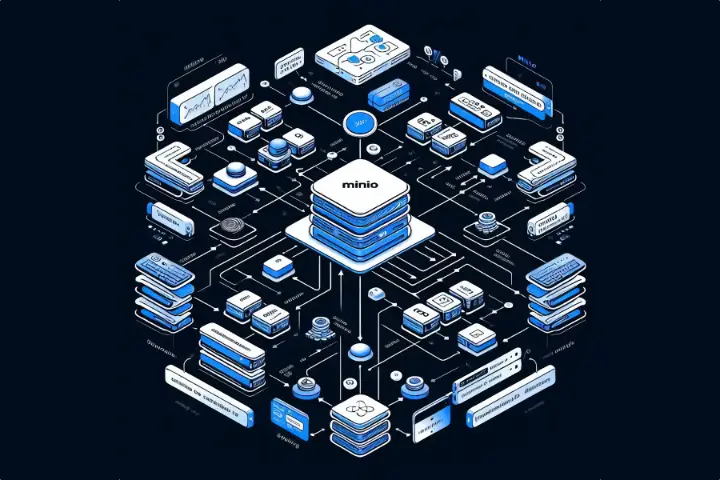

In my previous MinIO guides I covered setting up MinIO, enabling an external credential provider, adding replication, and using Prometheus for advanced reporting. All of this was done with a publicly accessible MinIO web interface. Having gone through all that, however, I decided to put MinIO behind a Tailscale VPN to further secure my data.

I’ve recently begun using Tailscale for remote access, and given that every system I use with MinIO is already part of my Tailscale network, I figured that leveraging the VPN could only improve my security.

The following guide covers the steps required to get MinIO monitoring working with Prometheus, extending the amount of reporting data available for your instance.

This guide assumes that MinIO is deployed via Docker, and we will use Docker to deploy a new Prometheus instance for each MinIO site. For information on setting up MinIO initially, see my previous MinIO guides.

For this guide we will use token-based authentication (a bearer token) to access MinIO's reporting data. The process can be drastically simplified by making the monitoring data publicly available, but that won’t be covered in detail here. As in previous guides, I will also minimise use of the root user, configuring a dedicated monitoring user and permissions instead.

Since setting up MinIO I’ve been keen to take a stab at setting up site-to-site replication. Right now I use MinIO for backups, and since MinIO-based storage is only one of several layers of redundancy I have, replication is not strictly necessary, but it was an interesting academic exercise.

This guide will be a bit lighter touch than the guides Configuring MinIO Part 1 (Docker with Nginx Reverse Proxy) and Part 2 (OpenID Connect) that I previously published. Most of the legwork in getting replication going is repeating the build covered in those guides. Enabling replication then largely just requires linking a second, fully built MinIO instance to the first. There are, however, some things to watch out for.

After setting up MinIO on a public-facing web server, one of the first things you will want to do is secure the web frontend. Out of the box, MinIO does not support multi-factor authentication. Instead, it allows you to use a third-party authentication service. In this guide we’ll explore how to do this with Google Cloud Apps and OpenID Connect. It’s relatively painless, and I believe it should all be doable under Google Cloud's free tier.

Recently I have been looking at options for storing PC backups. Currently I use the rather excellent Mac app Arq to back up to a local server (via a network share). I also have the software set to do a secondary backup via SFTP to a cloud server.

The limitation of using SFTP as the protocol for this second backup is that it doesn’t allow any routine processing of files on the server itself. For cold storage this is fine. However, when I need to validate that my backups are correct (scheduled every month or two), the checksum comparison between the cloud copy and the local copy has to be performed on my local PC, which means the entire online backup has to be downloaded. This makes verification slow and also eats into data allowances wherever the destination meters bandwidth.

MinIO is a free, open-source object storage solution that implements S3-compatible storage (think Amazon AWS S3). Alongside other capabilities such as versioning and retention policies, it allows checksums for files in a data store to be validated on the server itself. When comparing a backed-up file in the cloud to the local copy, only the checksums have to travel over the network, not the entire file. This saves a lot of bandwidth, which makes it perfect for my use case. Using MinIO instead of SFTP avoids waiting for files to download and keeps bandwidth to a minimum whenever I run my periodic checks.
